Controlling the Apple Vision Pro Headset With Eyes and Hands – Tech Live Trends

The recently announced Apple Vision Pro headset is controlled through eye tracking and hand gestures, eliminating the need for virtual reality controllers. Just how does this work?

During its recent unveiling at the WWDC23 developer event, Apple engineers explained that the new Vision Pro headset’s visionOS operating system is controlled through a combination of eye tracking and hand gestures.

In the visionOS operating system, the user’s eyes act as the targeting system. You browse icons by looking at them, much as you would move a mouse pointer on a laptop or hover a finger over the touchscreen of a tablet or smartphone.

The user interface elements in the headset react to your gaze. When you look at an element, it responds to indicate that it is targeted. When you look at the menu bar, it expands, and looking at the microphone icon, for instance, automatically triggers speech input.

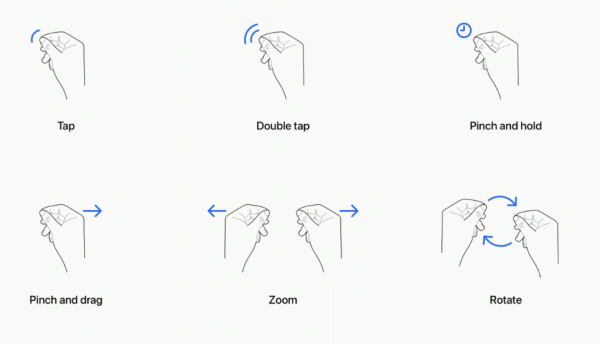

Pinching your index finger and thumb together is the equivalent of a mouse click or a tap on a touchscreen: it creates a “click” on the item you are looking at.
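The look-then-pinch model can be illustrated with a small sketch. This is not Apple's implementation or any visionOS API. The `Element` class, the flat 2D coordinates, and the `gaze_target` and `handle_frame` functions are all hypothetical names chosen for illustration, and the sketch assumes the system already provides a gaze point and a "pinch just started" signal.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    """A rectangular UI element on a flat panel (hypothetical model)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        # True when the point lies inside the element's rectangle.
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def gaze_target(elements: List[Element], gaze_x: float, gaze_y: float) -> Optional[Element]:
    """Return the first element under the gaze point, or None."""
    for element in elements:
        if element.contains(gaze_x, gaze_y):
            return element
    return None


def handle_frame(elements: List[Element], gaze_x: float, gaze_y: float,
                 pinch_started: bool) -> Optional[str]:
    """Return the name of the element 'clicked' this frame, or None.

    The eyes pick the target; the pinch confirms it.
    """
    target = gaze_target(elements, gaze_x, gaze_y)
    if target is not None and pinch_started:
        return target.name
    return None
```

In this model, looking at an icon without pinching does nothing beyond targeting it, and pinching while looking at empty space does nothing either. Only the two together produce a click.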

Other common actions, such as scrolling, combine your gaze with a gesture. To scroll, keep your fingers pinched and flick your wrist up or down in the direction you want to scroll.

You can zoom in or out by pinching with both hands held close together and then moving your hands apart. To rotate something, pinch with both hands close together but move them up or down instead of apart, as you would when zooming.
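One common way to turn a two-handed pinch into a zoom level is to compare the current distance between the hands with the distance when the gesture began. The sketch below assumes that approach. It is not taken from visionOS, and the `zoom_factor` function and its coordinate tuples are hypothetical.

```python
import math
from typing import Sequence


def zoom_factor(start_left: Sequence[float], start_right: Sequence[float],
                left: Sequence[float], right: Sequence[float]) -> float:
    """Return the scale implied by a two-handed pinch.

    Each argument is a hand position as (x, y, z) coordinates. A result
    above 1.0 means the hands moved apart (zoom in); below 1.0 means they
    moved together (zoom out).
    """
    start_distance = math.dist(start_left, start_right)
    if start_distance == 0:
        raise ValueError("hands must start at two distinct positions")
    return math.dist(left, right) / start_distance
```

For example, if the hands start 20 cm apart and end 40 cm apart, the factor is about 2, so the content would be shown at twice its original size under this model.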

All of these gestures are guided by where your eyes are looking, allowing you to control the user interface precisely without raising your hands in the air or relying on laser pointer controllers.

This is a neat and seamless implementation of AR/VR interactions, made possible by the Vision Pro’s TrueDepth hand-tracking sensor suite along with its precise eye tracking. Meta’s Quest Pro headset could in theory use a similar approach, although its camera-based hand tracking was not necessarily reliable enough, and the company wasn’t keen on investing its time in an interaction system that would not work on the Quest 2 and Quest 3 headsets, which don’t have eye tracking.

Early testers of the Vision Pro headset report that the device’s combination of hand gestures and eye selection creates a more intuitive interaction system than that of any existing headset.

However, some tasks are accomplished more effectively by touching things directly. To enter text in visionOS, for example, you type on a virtual keyboard with both hands. Apple also recommends “direct touch” in other usage scenarios, such as inspecting and manipulating small 3D objects and recreating real-world interactions.

Meta is also shifting its focus toward direct touch interactions in its Quest system software, but it is employing them for all interactions rather than a few specific tasks, thereby mimicking the functionality of a touchscreen. Meta’s implementation doesn’t require eye tracking, but users must hold their hands up in the air, which can become tiring after some time.