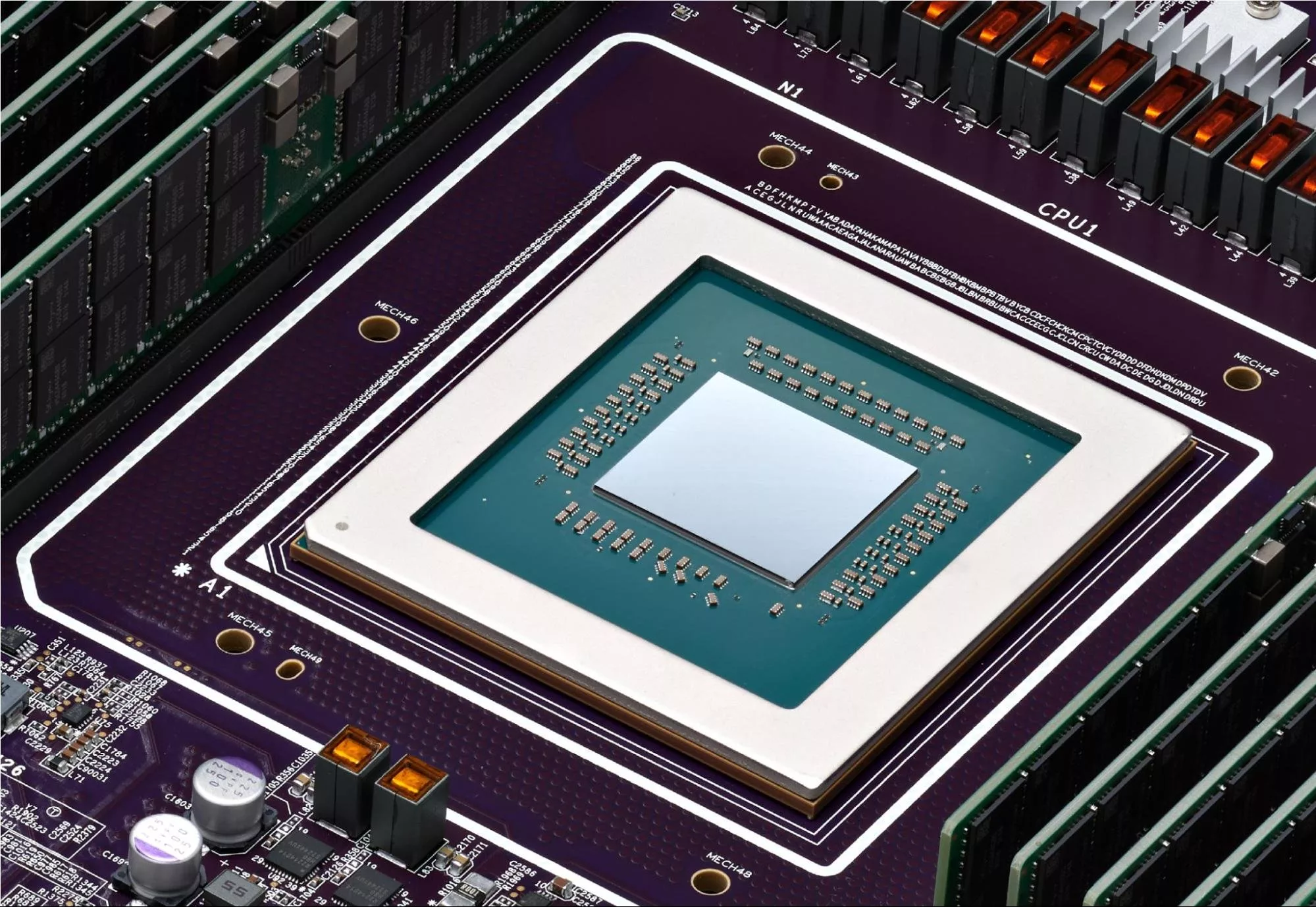

Google introduces new Arm-based data center CPU

The annual Google Cloud Next conference kicked off this year with a major announcement: the unveiling of the Axion processor, Google’s first Arm-based CPU designed specifically for data center deployment.

“At Google, we constantly push the boundaries of computing, exploring what is possible for grand challenges ranging from information retrieval, global video distribution, and of course generative AI. Doing so requires rethinking systems design in deep collaboration with service developers. This rethinking has resulted in our significant investment in custom silicon. Today, we are thrilled to announce the latest incarnation of this work: Google Axion Processors, our first custom Arm-based CPUs designed for the data center. Axion delivers industry-leading performance and energy efficiency and will be available to Google Cloud customers later this year,” Amin Vahdat, VP/GM, Machine Learning, Systems, and Cloud AI at Google, wrote in an official blog post.

Built on Arm’s Neoverse V2 CPU cores, Axion arrives with sizable performance claims. Google cites up to 30% better performance than the fastest general-purpose Arm-based cloud instances available today, and up to 50% better performance than comparable current-generation x86-based virtual machines (VMs). If those figures hold, a wide range of workloads on Google Cloud, from web applications to complex data analytics, should run faster. But raw performance isn’t the only story: Google also reports up to 60% better energy efficiency than comparable current-generation x86-based VMs. Lower energy use can reduce operating costs for cloud customers, which matters at a time when data centers are notorious for their energy consumption.

Google’s foray into custom processing units for the cloud didn’t begin with Axion. The company has been a leader in developing specialized chips for artificial intelligence (AI) workloads for roughly a decade. Its Tensor Processing Units (TPUs) were first deployed internally in Google’s data centers in 2015 and unveiled publicly in 2016. In 2018, Google began offering TPU access to third-party developers through its Google Cloud Platform.

Axion serves a dual purpose. First, it strengthens Google Cloud’s offerings with a powerful, energy-efficient processor, potentially attracting new customers who want a high-performance, cost-effective cloud platform. Second, it makes Google a more independent player in the chip market, potentially reducing its reliance on established chipmakers like Intel and AMD. This vertical integration also lets Google optimize chip design for its own cloud infrastructure. For its part, the Mountain View-headquartered tech titan plans to integrate Axion into its cloud services in a measured way. Several internal applications, including YouTube Ads and Google Earth Engine, are already utilizing Axion. Public availability of Axion-based VM instances is expected later this year.

Traditionally, x86 processors from Intel and AMD have dominated the data center landscape. However, Arm processors, prevalent in smartphones and known for their lower power consumption and efficient instruction sets, are increasingly gaining traction in the cloud computing arena. This is because cloud workloads often prioritize efficiency over raw processing power, making Arm processors a compelling option. By embracing Arm, Google joins the ranks of cloud giants like Amazon Web Services (AWS) and Microsoft Azure, both of which have already introduced their own Arm-based CPUs – Graviton and Cobalt, respectively.