A New York lawyer is in trouble for using ChatGPT to write a legal brief

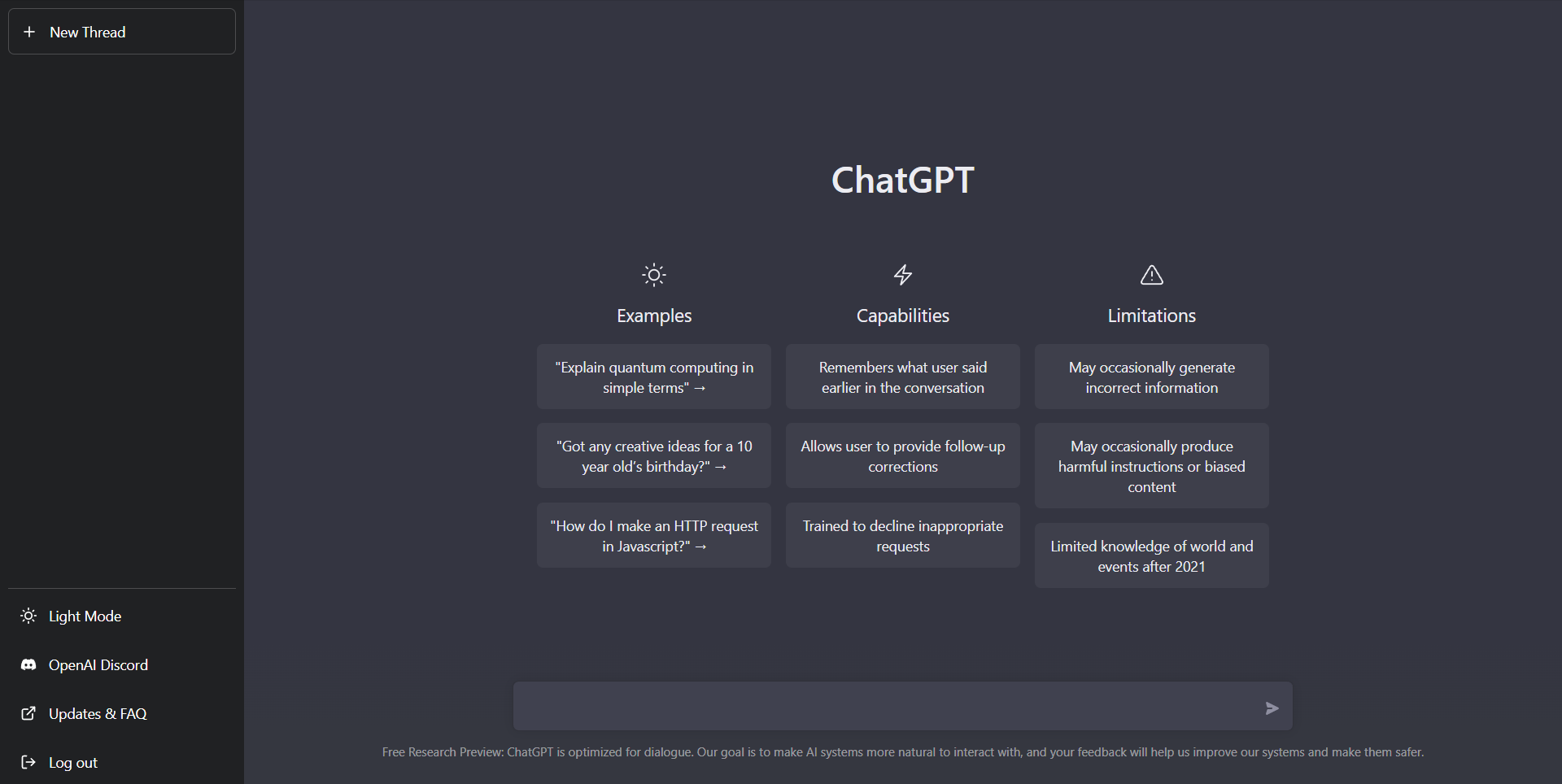

OpenAI’s ChatGPT has claimed the attention of millions ever since it was unveiled, and has successfully demonstrated the immense potential that generative AI possesses. In fact, the chatbot successfully passed exams in law and business schools as well, but its use to write legal briefs surely takes the cake. And yet, this is exactly what happened this time – a lawyer is now facing sanctions for having used ChatGPT in his legal brief.

In a case that continues to highlight the ethical challenges posed by emerging technologies and the meteoric rise of generative AI, New York lawyer Steven A. Schwartz – a practitioner of law for three decades – is now in hot water after it was discovered that he used ChatGPT to write a legal brief containing fictitious judicial decisions, counterfeit quotes, and fake internal citations. The legal brief, according to media reports, concerns a lawsuit filed by Roberto Mata, who sued Avianca airlines for injuries reportedly sustained from a serving cart while he was on one of its flights four years ago.

It is common practice for legal briefs to cite court decisions, especially references to relevant cases that support the arguments and positions presented by the lawyer(s). The situation was not so different this time – when the airline recently asked a federal judge to dismiss the case, Mata’s lawyers filed a 10-page brief arguing why the federal judge should not toss out the case and why the lawsuit should proceed. The legal brief cited more than half a dozen court decisions, including “Varghese v. China Southern Airlines,” “Martinez v. Delta Airlines” and “Miller v. United Airlines.”

All was well and good, except for one problem – the court decisions cited in the brief did not exist. In an affidavit, Schwartz admitted that he had made use of the AI chatbot to write the brief and “supplement” his research for the case. He went on to submit it with cases that seemed real but were instead generated by ChatGPT. US District Judge Kevin Castel confirmed the development, adding that “six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations.” He also set up a hearing on June 8 to discuss potential sanctions.

Schwartz told the court that he tried to verify the cases put forward by ChatGPT by asking it if it was lying. When he asked for a source, ChatGPT apologized for earlier confusion and insisted the case was real. The chatbot maintained that the other cases were real as well, following which Schwartz confidently put them in his brief. He added that he was “unaware of the possibility that its content could be false” and that he “greatly regrets having utilized generative artificial intelligence to supplement the legal research performed herein and will never do so in the future without absolute verification of its authenticity.”

AI can be an indispensable tool for research purposes, but we have a long way to go before it is refined and stops providing inaccurate information (and maintaining that it is legitimate). The emergence of ChatGPT – which sparked an epic AI race and birthed Google’s Bard, Microsoft’s Bing Chat, and others – has led to fears that as AI continues to advance, people may find themselves out of a job. From the looks of it, however, that day is not going to come anytime soon. This development raises serious concerns about the misuse of AI in legal practice and brings attention to the ethical dilemmas surrounding AI-generated content (which is inaccurate at times, as is evident in this case). Instances of fabricated AI-generated content have far-reaching implications, and serve as a wake-up call for legal professionals and tech organizations like OpenAI to re-evaluate their approach to the utilization of AI.

It is said that with great power comes great responsibility, and generative AI is certainly powerful in its own right. A critical examination of the safeguards, regulations, and oversight mechanisms is necessary to prevent further misuse or exploitation of AI – especially generative AI – and to ensure that its use is driven by ethical principles.